Artificial intelligence (AI) has the potential to augment medical diagnosis based on patient images. However, physician-machine partnerships, in which AI systems serve as decision support for physicians, are not guaranteed to outperform either physicians or machines alone in diagnosing disease, because both are susceptible to systematic errors, especially when diagnosing under-represented populations (refs. 1,2,3). The effectiveness of physician-machine partnerships depends on physicians’ ability to correctly incorporate or ignore AI suggestions, which in turn depends on physician expertise, AI system performance, and physicians’ understanding of when an AI system is prone to errant suggestions. In a store-and-forward teledermatology setting, we examined how diagnostic decisions made by physician-machine partnerships compared with those made by physicians alone.

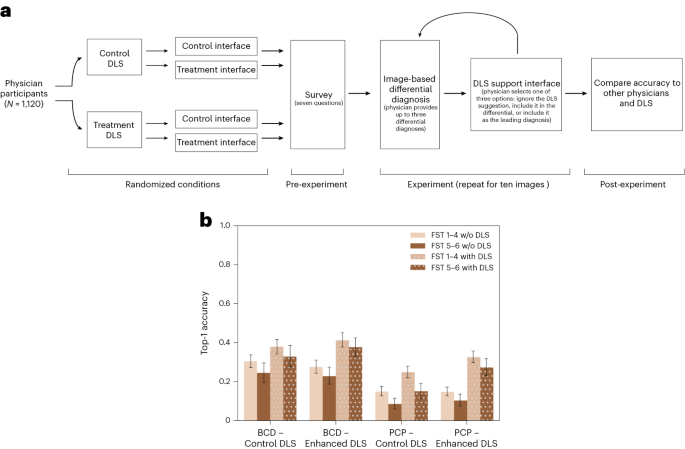

We recruited 1,118 physicians, including dermatologists, residents, primary care physicians and other physicians, and asked them to diagnose skin diseases from images displayed on an interactive website (Fig. 1a). In total, we collected 14,261 differential diagnoses for 364 clinical images of skin disease. Collecting many observations in a controlled setting gave us the statistical power to evaluate differences in diagnostic accuracy across several important dimensions: patient skin tone, physician expertise, skin disease type, AI assistance accuracy, and interface design. The AI assistance is based on a deep neural network trained on clinical images of skin disease, and the assistance interface asks physicians to either incorporate or ignore the system's suggestion. This integrative experimental design is straightforward to replicate, and it promotes commensurability by revealing the diagnostic accuracy of physicians under a variety of conditions that can inform clinical practice.
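To make the stratified comparison concrete, here is a minimal Python sketch of computing accuracy within each level of one dimension (such as skin tone or physician expertise) and a light-minus-dark accuracy gap. The observation records, the field names (`skin_tone`, `expertise`, `top1_correct`), and the helpers `accuracy_by` and `disparity` are hypothetical illustrations, not the study's data or analysis code, and the significance testing behind the reported results is not shown.

```python
from collections import defaultdict

# Hypothetical toy observations, one per diagnosis. The field names and values
# are illustrative only; they are not the study's data or schema.
observations = [
    {"skin_tone": "light", "expertise": "specialist", "top1_correct": True},
    {"skin_tone": "light", "expertise": "generalist", "top1_correct": False},
    {"skin_tone": "light", "expertise": "specialist", "top1_correct": True},
    {"skin_tone": "dark", "expertise": "specialist", "top1_correct": False},
    {"skin_tone": "dark", "expertise": "generalist", "top1_correct": False},
    {"skin_tone": "dark", "expertise": "specialist", "top1_correct": True},
]


def accuracy_by(records, key, outcome="top1_correct"):
    """Return {group: share of records in that group whose outcome is True}."""
    hits, totals = defaultdict(int), defaultdict(int)
    for record in records:
        group = record[key]
        totals[group] += 1
        hits[group] += int(record[outcome])
    return {group: hits[group] / totals[group] for group in totals}


def disparity(records, outcome="top1_correct"):
    """Accuracy on light skin minus accuracy on dark skin (positive = gap)."""
    acc = accuracy_by(records, "skin_tone", outcome)
    return acc["light"] - acc["dark"]


print(accuracy_by(observations, "skin_tone"))
print(accuracy_by(observations, "expertise"))
print(disparity(observations))
```

The same helper could be called with any other field, for example a hypothetical `disease_type` or `interface` key, to slice the observations along the remaining dimensions listed above.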

We found that diagnosing inflammatory-appearing skin disease from a single image with up to three free-response answers (rather than multiple-choice answers) was challenging for specialists and generalists alike, but accuracy improved significantly with access to AI assistance. Specialists' and generalists' leading diagnoses were correct in 27% and 13% of observations, respectively, and their top three differential diagnoses contained the correct diagnosis in 38% and 19% of observations, respectively. With access to AI assistance and the opportunity to replace their leading diagnosis with the AI suggestion, physicians' accuracy increased significantly, from 27% to 36% for specialists and from 13% to 22% for generalists. When the AI system made an incorrect suggestion, physician accuracy decreased by 1.2 percentage points, a change that was not statistically significant. These results show that AI assistance has the potential to significantly increase physicians' diagnostic accuracy while introducing few misdiagnoses. However, we found that physicians were 4 percentage points less accurate on dark skin tones than on light skin tones, and that AI assistance widened generalists' accuracy disparity across skin tones by a statistically significant 5 percentage points. These results (Fig. 1b) illustrate that improving overall diagnostic accuracy does not necessarily address bias in accuracy across skin tones.
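The distinction between top-1 and top-three accuracy, and between percentage-point and relative gains, can be illustrated with a short Python sketch. The helper names, the example differential, and the case-insensitive string matching are assumptions for illustration; scoring real free-response diagnoses would require handling synonyms and spelling variants. Only the 27% to 36% and 13% to 22% figures come from the text.

```python
def normalize(answer):
    """Crude normalization for free-text answers (an assumption; real scoring
    of free-response diagnoses would need synonym handling)."""
    return answer.strip().lower()


def top_k_correct(differential, reference, k):
    """True if the reference diagnosis is among the first k ranked answers."""
    return normalize(reference) in [normalize(a) for a in differential[:k]]


def change(before, after):
    """Return (absolute change in percentage points, relative change in %)."""
    return after - before, 100 * (after - before) / before


# Hypothetical three-answer differential for one image.
differential = ["Eczema", "psoriasis", "Lichen planus"]
print(top_k_correct(differential, "Psoriasis", k=1))  # False: leading answer only
print(top_k_correct(differential, "Psoriasis", k=3))  # True: any of the top three

# Top-1 accuracies reported above, without and with AI assistance.
for group, before, after in [("specialists", 27, 36), ("generalists", 13, 22)]:
    pp, rel = change(before, after)
    print(f"{group}: {pp:+d} pp, {rel:+.0f}% relative")
```

Both groups gain 9 percentage points, which corresponds to roughly a 33% relative improvement for specialists and a 69% relative improvement for generalists, because generalists start from a lower baseline.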